In the rapidly evolving field of machine learning, optimization methods play a pivotal role in enhancing model performance and reliability. Sharpness-Aware Minimization (SAM) emerges as a groundbreaking approach, focusing on refining these models by carefully considering the loss landscape’s sharpness. This innovative strategy aims to improve generalization capabilities, ensuring models perform effectively on unseen data. As we explore SAM’s impact on machine learning frameworks, its unique methodology offers a promising avenue for developing robust and reliable neural networks.

Understanding SAM in Machine Learning

In the evolving landscape of machine learning, various optimization methods have emerged, each with a unique approach to improving the performance and reliability of neural networks. Among these, Sharpness-Aware Minimization (SAM) represents an innovative shift towards understanding and refining the optimization process. SAM introduces a focused strategy aimed at enhancing the generalization capabilities of machine learning models by meticulously examining the sharpness of the loss landscape, a factor intrinsically linked to a model’s ability to perform well on unseen data.

Neural networks learn by adjusting their parameters in response to data. The conventional method involves minimizing a loss function that measures the difference between the predicted and actual outputs. However, achieving a low loss on training data doesn’t necessarily guarantee that a model will perform well on new, unseen data. This discrepancy between training performance and real-world application has prompted researchers to explore techniques that can bridge this gap, with SAM emerging as a promising solution.

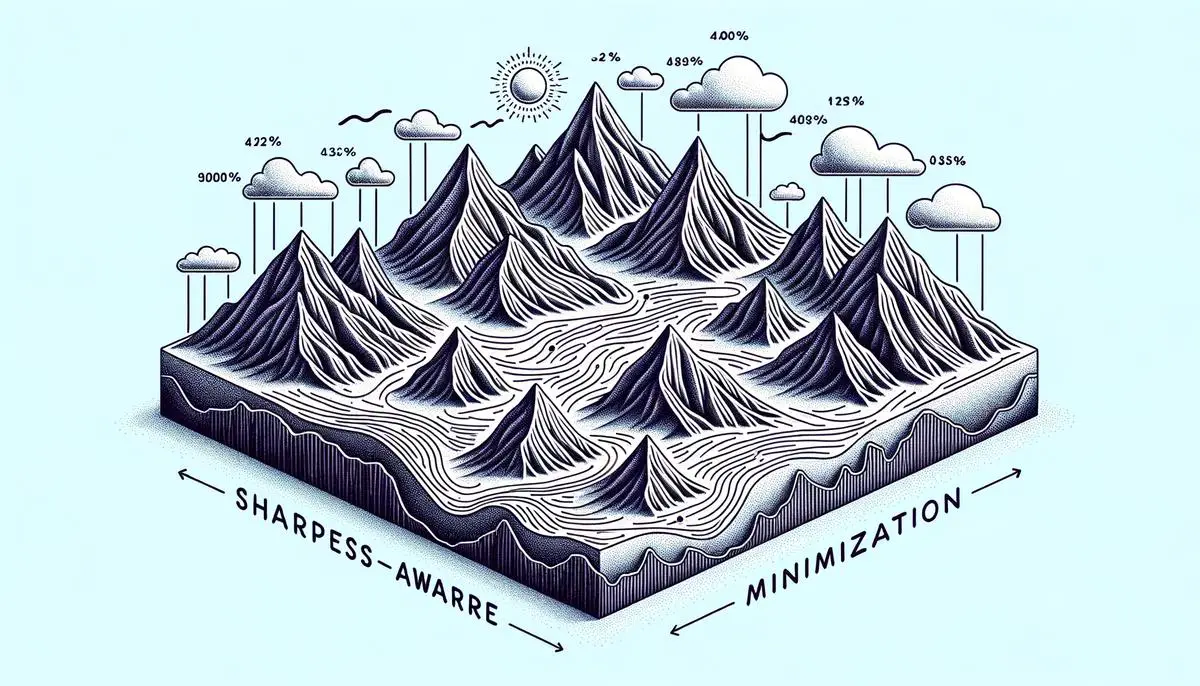

The foundational principle of SAM lies in its novel approach to the optimization process. Instead of solely focusing on lowering the training loss, SAM also takes into account the sharpness of the loss landscape, an area lesser explored in traditional optimization methods. In machine learning parlance, the sharpness pertains to how rapidly the loss value escalates as one deviates from the minimum point in multiple directions. A sharper loss landscape suggests that a slight change in model parameters could significantly increase the loss, indicating a model that may perform well on the training set but has poor generalization to new data. Conversely, models situated in flatter regions of the loss landscape are generally observed to generalize better.
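One common way to make this notion of sharpness concrete is to measure the largest loss increase reachable within a small ball around the current weights. The symbols below are notation assumed for this illustration and are not defined in the text above: $L$ is the training loss, $w$ the weights, $\epsilon$ a perturbation, and $\rho$ the neighborhood radius.

```latex
\[
  \text{sharpness}_{\rho}(w) \;=\; \max_{\|\epsilon\|_2 \le \rho} L(w + \epsilon) \;-\; L(w)
\]
```

A large value means that a small move away from $w$ can raise the loss substantially (a sharp region); a value near zero indicates a flat one.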

How SAM Functions within Machine Learning Frameworks

SAM enriches the training process by implementing a two-step procedure to update neural network weights. First, it probes for sharp regions by looking for the direction in parameter space that increases the loss the most within a small neighborhood of the current weights. In practice this means temporarily perturbing the model parameters along the normalized gradient of the loss. The loss observed at the perturbed point indicates how sharp the surrounding region is.

Following this exploration, SAM optimizes a modified objective that combines the original loss with a penalty for sharpness. In essence, it directs the model towards parameter updates that not only minimize the actual loss but also steer clear of sharp minima, favoring flatter regions that are associated with better generalization. This dual focus reflects SAM’s aim of going beyond mere loss reduction towards robust performance across varied datasets.
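The two-step procedure is often written as follows. This is a sketch of the usual first-order formulation rather than a statement of any particular library's implementation; the symbols ($L$ for the loss, $w$ for the weights, $\rho$ for the neighborhood radius, $\eta$ for the learning rate) are notation assumed here.

```latex
\begin{align*}
  &\min_{w} \; \max_{\|\epsilon\|_2 \le \rho} L(w + \epsilon)
      && \text{(sharpness-aware objective)} \\
  &\hat{\epsilon}(w) = \rho \, \frac{\nabla_w L(w)}{\|\nabla_w L(w)\|_2}
      && \text{(step 1: approximate worst-case perturbation)} \\
  &w \leftarrow w - \eta \, \nabla_w L(w) \big|_{w + \hat{\epsilon}(w)}
      && \text{(step 2: descend with the gradient taken at the perturbed point)}
\end{align*}
```

The inner maximization is not solved exactly; the perturbation in step 1 comes from a first-order approximation of the loss around $w$, which is why one extra gradient computation is enough.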

Implications and Advantages

Introducing SAM into machine learning frameworks changes how models are trained and optimized. By adding sharpness-awareness to the optimization process, SAM improves a model’s ability to generalize from training data to unseen data, a cornerstone of effective machine learning applications. The implications extend across various domains, promising enhanced performance and reliability in AI-driven systems.

Moreover, SAM’s utility is not confined to specific types of neural networks but spans convolutional and recurrent networks alike, attesting to its versatile applicability in machine learning tasks from image recognition to sequential data processing. Its integration into training routines can be accomplished with minimal adjustments, making it an accessible and highly beneficial enhancement for existing and future machine learning pipelines.

In conclusion, Sharpness-Aware Minimization (SAM) introduces a nuanced perspective to neural network training by highlighting the significance of the loss landscape’s shape in model generalization. Its methodology emphasizes not just minimizing loss but doing so in a manner that consciously avoids sharp minima, promoting better performance on new, unseen data. As AI and machine learning continue to permeate various sectors, techniques like SAM play a pivotal role in refining and ensuring the robustness and reliability of these technologies.

Theoretical Foundations of SAM

Delving into the conceptual bedrock of Sharpness-Aware Minimization (SAM) in machine learning demands an understanding of its underpinning facets: the intricacies of loss landscapes in neural networks, the quintessence of model generalization, and SAM’s nuanced stance on the issue of sharp minima. This exploration seeks to dissect these foundational elements to elucidate how they collectively influence the innovative trajectory of SAM, guiding its mission to enhance machine learning models.

At its core, the loss landscape in neural networks is a conceptual mapping that charts the performance variations of a model in response to different weight configurations. Imagine traversing a multidimensional terrain where each coordinate represents a unique setting of model weights and the elevation correlates with the loss, or error, produced by employing those weights. Certain regions—valleys and basins—denote low-loss areas where models closely predict outcomes. Within this metaphorical landscape, minima—points of lowest loss—become paramount landmarks, signaling optimal model weight configurations.

The aim is to locate not just any minimum but one that fosters model generalization, meaning the capability of a machine learning model to perform well on unseen data. Generalization underlines a model’s utility beyond its training dataset, a cornerstone for its application in real-world contexts. Historically, the goal was simply to reach a global minimum, on the belief that it would generalize best. However, minima differ in shape: some are sharp and others are wide.

Sharp minima are akin to narrow valleys with steep inclines; though they may attain low training loss, they are sensitive to weight perturbations, which often translates into poor generalization on unseen data. Conversely, wide minima, resembling expansive valleys with gentler slopes, are associated with models that fare better in terms of generalization, thanks to their inherent stability against minor weight shifts. This distinction highlights the delicate balance between achieving low training loss and maintaining robust performance on new data, a balance SAM aspires to achieve.
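The contrast between sharp and wide minima can be illustrated with a small sketch. The two one-dimensional losses below are hypothetical and chosen only for illustration: both reach the same minimum value at `w = 0`, but one has much higher curvature. The helper `worst_case_increase` is likewise an assumed name, not part of any library.

```python
def worst_case_increase(loss, w, rho):
    """Largest loss increase when w moves by at most rho.

    For a convex 1-D loss the maximum over [w - rho, w + rho] is at an endpoint,
    so checking both ends is enough here.
    """
    return max(loss(w + rho), loss(w - rho)) - loss(w)

# Two hypothetical losses with the same minimum (value 0 at w = 0).
sharp = lambda w: 50.0 * w ** 2   # narrow valley, high curvature
flat = lambda w: 0.5 * w ** 2     # wide valley, low curvature

rho = 0.1
sharp_gap = worst_case_increase(sharp, 0.0, rho)
flat_gap = worst_case_increase(flat, 0.0, rho)
print(sharp_gap, flat_gap)  # roughly 0.5 versus 0.005
```

Both minima look identical if only the loss at `w = 0` is inspected; the difference appears only when the weights are nudged, which is the quantity SAM is designed to account for.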

SAM engages with this complex terrain through a pioneering lens, highlighting the significance of addressing sharp minima. It adopts a two-stranded approach: initially estimating the worst-case loss increase introduced by perturbing model weights, thereby illuminating the sharpness of the local minima. Subsequent to this revelation, SAM navigates towards a direction that reduces both the identified peak loss and encourages movement towards wider, more generalization-friendly minima.

The algorithm’s quest does not merely rest upon locating wide minima but embarks on a cultivated path that discernibly improves model generalization. By consciously avoiding the pitfalls of sharp minima, SAM sets the stage for neural networks that stand robust against the variance of practical applications.

This endeavour towards bolstering model utility through refined optimization techniques underpins SAM’s conceptual framework. It contributes a compelling narrative to the optimization theater in machine learning—highlighting that the journey towards efficacious neural networks transcends the simplistic ambition of minimizing training loss. Instead, it embraces a holistic view, contemplating the nuanced dynamics between loss landscapes, minima characteristics, and the overarching aspiration for unassailable model generalization.

Taken together, these theoretical underpinnings form a cohesive blueprint for SAM, guiding its strategy in refining machine learning models. By intertwining an intricate understanding of loss landscapes with a focused objective to conquer sharp minima, SAM heralds a pragmatic approach aimed at escalating the capabilities of neural networks, ensuring their readiness for the rigorous demands of real-world applications.

Implementing SAM in Neural Networks

Implementing SAM in Real-World Machine Learning Projects

The integration of Sharpness-Aware Minimization (SAM) into machine learning projects offers a pathway to enhanced model performance and generalization capabilities. This segment discusses how to efficiently apply SAM within neural network architectures, providing a clear guide for developers aiming to incorporate this optimization method into their machine learning projects.

Initial Steps before Applying SAM

Before integrating SAM into your machine learning workflow, it’s crucial to establish a solid foundation. This requires an understanding of your neural network’s architecture and a machine learning environment built on a framework, such as TensorFlow or PyTorch, for which SAM implementations are available or in which SAM can be implemented by hand.

Adjusting the Training Process

Incorporating SAM involves modifying the traditional training process of neural networks. Conventionally, training a neural network focuses on reducing the loss by adjusting weights directly based on gradients. SAM introduces an intermediary step aimed at considering the sharpness of the loss landscape around the current weights.

- Compute the Gradient: Initially, compute the gradient with respect to the model’s parameters on the current batch of data. This step is similar to standard training practices.

- Identify Sharpness: Next, adjust the weights temporarily in the direction that maximizes the loss within a certain neighborhood. This step helps identify regions of sharp minima, which are less desirable for model generalization.

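- Update Weights Strategically: Finally, compute the gradient again at this perturbed position, restore the original weights, and apply that gradient to them with the usual descent step (for example through SGD or Adam). Because the update direction comes from the worst-case neighbor rather than the current point, it favors flatter areas of the loss landscape, which contribute to better generalization.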

This modification requires careful tuning of hyperparameters such as the learning rate and the neighborhood size for examining sharpness, both crucial for SAM’s effectiveness.
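The three steps above can be sketched in plain Python on a toy problem. This is a minimal illustration under stated assumptions, not a reference implementation: `loss_and_grad` is a hypothetical quadratic loss standing in for a network and a batch, and `sam_step`, `lr`, and `rho` (the neighborhood size) are names chosen here.

```python
import math

def loss_and_grad(w):
    """Hypothetical toy loss: an elongated bowl, L(w) = 0.5 * (10*w0^2 + w1^2)."""
    loss = 0.5 * (10.0 * w[0] ** 2 + w[1] ** 2)
    grad = [10.0 * w[0], w[1]]
    return loss, grad

def sam_step(w, lr=0.05, rho=0.05):
    # Step 1: gradient at the current weights.
    _, g = loss_and_grad(w)
    norm = math.sqrt(sum(gi * gi for gi in g)) + 1e-12  # guard against division by zero

    # Step 2: temporary move to the approximate worst-case point within radius rho.
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]

    # Step 3: gradient at the perturbed point, applied to the ORIGINAL weights.
    _, g_adv = loss_and_grad(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]

w = [1.0, 1.0]
initial_loss, _ = loss_and_grad(w)
for _ in range(100):
    w = sam_step(w)
final_loss, _ = loss_and_grad(w)
print(initial_loss, final_loss)
```

In a deep learning framework the same pattern needs two forward-backward passes per batch. Note that with a fixed `rho` and learning rate the iterates in this sketch hover close to the minimum rather than settling exactly on it, since the perturbation never shrinks to zero.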

Practical Tips for Developers

- Understand the nuances: Grasping the theoretical foundation behind SAM and its practical implications on neural network training will guide better implementation choices.

- Hyperparameter Tuning: Start with recommended settings for SAM from existing literature or guides, but be prepared to adjust based on your specific model architecture and dataset.

- Regular Evaluation: It’s essential to monitor the performance and behavior of your model during training. Integrate validation steps to ensure that the incorporation of SAM is indeed improving model generalization without excessively prolonging the training phase.

- Leverage Community and Tools: Make use of available tools and libraries that support SAM integration. Networking with the machine learning community for insights and potential optimizations can uncover advanced strategies to maximize SAM’s benefits.

Incorporating SAM into neural network projects doesn’t have to be intimidating. With a structured approach, understanding of its principles, and adjustment to the training process, developers can utilize it to push forward the performance and generalization capabilities of machine learning models efficiently. The key is to iteratively experiment with its implementation, leveraging foundational knowledge and community resources to navigate potential challenges in optimizing neural networks with SAM.

Comparative Analysis of SAM and Traditional Optimization Techniques

Comparing SAM to Traditional Optimization Techniques in Machine Learning: A Deeper Look

In the dynamic world of machine learning, the introduction of Sharpness-Aware Minimization (SAM) has sparked considerable interest among practitioners and researchers alike. Given the diverse array of optimization techniques such as Stochastic Gradient Descent (SGD) and Adam, how does SAM stack up? This examination aims to offer a comprehensive comparison, highlighting both the strengths and limitations of SAM based on empirical studies and real-world applications, thereby providing a balanced viewpoint on its place within the spectrum of available optimization methods.

Stochastic Gradient Descent (SGD) and Adam have long been staple optimization algorithms in the machine learning community. SGD, known for its simplicity and effectiveness, has been instrumental in training a wide variety of models. It works by updating the model parameters in the direction of the negative gradient of the loss function, thereby minimizing it. Despite its proven success, SGD can sometimes be slow to converge, particularly in complex loss landscapes fraught with local minima.

In contrast, Adam (short for Adaptive Moment Estimation) extends the principles of SGD, incorporating momentum and per-parameter adaptive learning rates to accelerate convergence. This allows Adam to adapt to the geometry of the loss surface, making it highly effective for large datasets and complex models. However, one critique of Adam is that its fast convergence may lead to overfitting on the training data, thereby hurting model generalization.
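To make the difference between the two update rules concrete, here is a minimal sketch of a single SGD step and a single Adam step. The function names, the `state` dictionary, and the example gradient are assumptions made for illustration; the hyperparameter defaults for `beta1`, `beta2`, and `eps` follow commonly used values.

```python
import math

def sgd_update(w, g, lr=0.1):
    """Plain gradient step: move against the gradient."""
    return [wi - lr * gi for wi, gi in zip(w, g)]

def adam_update(w, g, state, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam step; `state` holds the step count and running moment estimates."""
    state["t"] += 1
    t = state["t"]
    new_w = []
    for i, (wi, gi) in enumerate(zip(w, g)):
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * gi        # first moment (momentum)
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * gi * gi   # second moment (scale)
        m_hat = state["m"][i] / (1 - beta1 ** t)   # bias correction
        v_hat = state["v"][i] / (1 - beta2 ** t)
        new_w.append(wi - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_w

grad = [10.0, 0.1]   # hypothetical gradient with very different scales per coordinate
w0 = [1.0, 1.0]
state = {"t": 0, "m": [0.0, 0.0], "v": [0.0, 0.0]}
w_sgd = sgd_update(w0, grad)
w_adam = adam_update(w0, grad, state)
print(w_sgd, w_adam)
```

On this first step SGD moves each coordinate in proportion to its gradient, while Adam moves both coordinates by about the same amount (close to `lr`) because it rescales by the running gradient magnitude. Neither rule looks at how the loss behaves around the current point, which is the gap SAM targets.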

SAM introduces a nuanced strategy in optimization, as it specifically aims at improving model robustness and generalization by focusing on the sharpness of the minima in the loss landscape. This is predicated on the insight that flatter minima tend to lead to models that generalize better on unseen data—a principle that neither SGD nor Adam specifically targets. SAM seeks to find weight parameters that reside in regions with smoother loss surfaces by taking an extra step in each update to estimate and mitigate sharpness. This inherently addresses some of the pitfalls associated with traditional optimization techniques like SGD and Adam, particularly in tasks where generalization is paramount.

The empirical support for SAM’s effectiveness comes from various studies and applications across a breadth of tasks. Research has shown that models optimized with SAM demonstrate superior generalization capabilities, especially in tasks that are susceptible to overfitting or where the quality of data is variable.

However, this enhanced performance does not come without trade-offs. Chief among these is the increased computational cost of SAM’s two-step update process. Each update needs two gradient computations instead of one, which roughly doubles the per-step workload and makes SAM more resource-intensive than SGD or Adam. The second consideration is SAM’s sensitivity to hyperparameter settings. Practitioners need to tune them carefully, in particular the neighborhood size, so that sharpness is penalized enough to help generalization but not so heavily that it stalls progress towards low-loss minima.
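The extra cost can be seen by counting gradient evaluations. The sketch below uses a hypothetical quadratic loss and an assumed helper class, `CountingGrad`, that wraps a gradient function and records how often it is called; it measures call counts only, not wall-clock time.

```python
import math

class CountingGrad:
    """Wraps a gradient function and counts how many times it is called."""
    def __init__(self, grad_fn):
        self.grad_fn = grad_fn
        self.calls = 0

    def __call__(self, w):
        self.calls += 1
        return self.grad_fn(w)

def quad_grad(w):
    return [2.0 * wi for wi in w]   # gradient of the hypothetical loss sum(w_i^2)

def sgd_step(w, grad, lr=0.1):
    g = grad(w)                      # one gradient evaluation
    return [wi - lr * gi for wi, gi in zip(w, g)]

def sam_step(w, grad, lr=0.1, rho=0.05):
    g = grad(w)                      # first evaluation: find the perturbation
    norm = math.sqrt(sum(gi * gi for gi in g)) + 1e-12
    g_adv = grad([wi + rho * gi / norm for wi, gi in zip(w, g)])  # second evaluation
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]

sgd_grad, sam_grad = CountingGrad(quad_grad), CountingGrad(quad_grad)
w_sgd = w_sam = [1.0, -2.0]
for _ in range(10):
    w_sgd = sgd_step(w_sgd, sgd_grad)
    w_sam = sam_step(w_sam, sam_grad)
print(sgd_grad.calls, sam_grad.calls)  # prints: 10 20
```

For a neural network each gradient evaluation corresponds to a full forward and backward pass, so the doubled call count translates fairly directly into training time.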

While SAM represents a significant advancement in optimization strategies for machine learning, integration into projects should be meticulously considered. For scenarios where model generalization is critical and computational resources are not a limiting factor, SAM provides a compelling option beyond traditional techniques like SGD and Adam. However, for projects with stringent computational constraints or those where rapid iterations are necessary, SGD and Adam, with their proven track records and less intensive computational demands, may remain preferable.

In conclusion, the introduction of SAM adds a valuable tool to the machine learning practitioner’s arsenal, particularly in addressing complex challenges around model generalization and robustness. As with any optimization technique, the choice between SAM, SGD, Adam, or any other method should be informed by specific project requirements, goals, data characteristics, and resources available. Continuous exploration, combined with empirical validation, will remain crucial as the machine learning landscape evolves, ensuring that optimization techniques keep pace with the growing complexity and diversity of applications.

Challenges and Limitations of SAM

Diving deeper into Robustness and Generalization: The Challenges and Limitations of SAM in Machine Learning

As we explore the intersection of optimization techniques in machine learning, Sharpness-Aware Minimization (SAM) enters as a potent player, designed to navigate the intricate loss landscapes neural networks present. SAM, notable for seeking out wide minima to enhance model generalization, has been met with both enthusiasm and close scrutiny within the tech community. The method aims to push the boundaries of how neural networks learn and adapt, targeting enhanced performance across diverse scenarios. Yet, for all its ambitions, SAM faces challenges that merit a closer inspection.

A significant challenge encountered with SAM implementation is the computational overhead it introduces. Compared to traditional optimization techniques such as Stochastic Gradient Descent (SGD) or Adam, SAM’s unique two-step process calls for additional computational resources. This involves first computing the gradient for weight parameters, followed by an identification of sharpness to ultimately update the weights strategically. This dual-step approach, while beneficial in sculpting models that generalize better, undeniably demands more in terms of computational effort and time. For projects on a tight computational budget or those that necessitate rapid deployment, this added complexity invites deliberation on cost-benefit trade-offs.

Adjusting the intricate parameters within SAM adds another layer of complexity. Fine-tuning these parameters requires a deep understanding of the model’s architectural nuances and the nature of the data it ingests. The lack of a one-size-fits-all solution means developers often embark on a trial-and-error journey to identify optimal settings. Because SAM’s performance is sensitive to these hyperparameters, striking the right balance is both critical and challenging. Novices in machine learning or developers constrained by project timelines might find this tuning process particularly daunting.

Moreover, SAM’s universal applicability comes into question across various scenarios. While its contributions to enhancing model generalization are well acknowledged, the reality is that SAM may not always produce significant benefits. For problems where data is inherently simplistic or when models do not suffer from overfitting issues, the benefits of integrating SAM might not be as pronounced. Techniques such as data augmentation or simpler regularization methods could potentially offer comparable gains without SAM’s involved setup and computational cost.

Industry experts and academic researchers shed light on these limitations through rigorous evaluation. Studies focused on comparative analyses between models optimized with SAM versus traditional methods reveal the scenarios where SAM shines and also outline its relative inefficacies. It becomes evident that while SAM proposes a compelling approach to model optimization, its utility is modulated by the specific challenges and requirements of the project at hand.

Insights from these scholarly collations and empirical evaluations guide developers navigating the intricacies of SAM. The overarching advice leans towards a nuanced understanding of SAM’s potential and limitations. While SAM offers a strategic method of optimization that can lead to more robust and generalizable neural network models, awareness and careful consideration of its computational overhead, tuning complexity, and scenario-specific payoff are vital. Thus, while SAM heralds a progressive step in machine learning optimization techniques, it joins a suite of tools whose optimal application requires judicious selection and thoughtful implementation.

Future Directions for SAM in Machine Learning

As we peer into the horizon of machine learning, there’s one term that’s beginning to echo a bit louder than the rest: Sharpness-Aware Minimization (SAM). With SAM stirring interest and curiosity in the domain of machine learning optimization, its trajectory brims with potential innovations and challenges alike. Here, we delve into what the future may hold for SAM in machine learning, touching upon exciting research destinations and areas ripe for improvement.

Research Directions for SAM:

- Integration with Emerging Technologies:

A compelling trajectory for SAM is its integration with burgeoning machine learning techniques and technologies. Imagine uniting SAM’s prowess in ensuring robust generalization with the dynamic adaptability of Reinforcement Learning (RL) or the profound generative capabilities of Generative Adversarial Networks (GANs). The fusion could pioneer models with enhanced adaptability and creativity while significantly boosting their generalization across unseen scenarios.

- Enhanced Computational Efficiency:

Despite SAM’s promising results, it’s no secret that its bespoke two-step optimization process demands additional computational resources. Future research could lean towards the development of more computationally efficient variants of SAM, possibly by refining the sharpness evaluation step or optimizing the update mechanism to reduce computational overhead without compromising the end benefits of the optimization process.

- Broadened Applicability and Flexibility:

Though SAM has shown promise across different neural network architectures, its universal applicability remains a puzzle piece that is yet to perfectly fit. Investigating how SAM behaves in a wider variety of contexts, especially in less conventional or emerging neural network designs, could be quite enlightening. Beyond flexibility, fine-tuning SAM for specific tasks or industry applications could lead to bespoke optimization strategies, enhancing performance and user experience in niche areas.

- Tackling the Hyperparameter Sensitivity:

Another avenue for enhancement lies in addressing SAM’s sensitivity to hyperparameters. Research focused on developing guided, adaptive, or even autonomous processes for hyperparameter selection could mitigate one of SAM’s considerable hurdles. This approach would not only ease the use of SAM for practitioners but could also unlock its full potential by ensuring optimal parameter settings across different scenarios.

- Empirical Foundations and Theoretical Underpinnings:

SAM’s journey would greatly benefit from more empirical studies and theoretical investigations aimed at understanding its fundamental mechanics and limitations more deeply. This pursuit includes exploring the mathematical underpinnings of why SAM improves generalization and identifying edge cases where SAM’s advantages may diminish. Such foundational insights could prop open doors to methodical refinements and ensure SAM’s claims are solidly anchored in empirical proof and theoretical logic.

- Automatic Adaptation Mechanisms:

Looking further ahead, an enthralling research direction could focus on imbuing SAM with self-adaptive mechanisms. These would allow SAM-equipped models to dynamically adjust their optimization strategy based on real-time feedback regarding model performance and generalization. This direction not only marks a stride towards more autonomous machine learning systems but also equips models to navigate the ever-changing flood of data they learn from.

Conclusion:

Venturing into the future with SAM cradles the promise of machine learning models that are not just intricate in their functionalities but robust and reliable in the face of diverse and evolving data landscapes. Each step forward is a stride towards optimizing models that empower technologies and industries across the spectrum.

As we stand at the forefront of optimizing machine learning models, Sharpness-Aware Minimization (SAM) represents a significant leap forward in our quest for excellence. By emphasizing the importance of flat minima and steering clear of sharp ones, SAM not only enhances model generalization but also sets new benchmarks for model reliability across various applications. This focus on optimizing through an understanding of loss landscape sharpness heralds a new era in machine learning development—ushering in more sophisticated and adaptable models ready to meet the challenges of tomorrow’s data-driven world.