Meta is expanding the horizons of image segmentation with a billion masks and 11 million images with the Segment Anything project!

The Segment Anything (SA) project is an innovative breakthrough in the field of image segmentation, offering a new task, model, and dataset. With the development of the largest segmentation dataset to date (boasting over one billion masks and 11 million images), the project offers a comprehensive solution to the challenges of image segmentation.

Introduction to the Segment Anything Project

At the core of the SA project lies the Segment Anything Model (SAM), which is promptable, allowing it to transfer zero-shot to new image distributions and tasks. Our model has demonstrated impressive zero-shot performance in various tasks, often outperforming or matching the results of prior supervised models. The corresponding dataset, SA-1B, is accessible at https://segment-anything.com, serving as a resource for foundation models in computer vision research.

Segment Anything Project Key Components: Task, Model, and Data

The success of the SA project is built upon three key components: task, model, and data. To develop these, we address the following questions about image segmentation:

- What task will enable zero-shot generalization?

- What is the corresponding model architecture?

- What data can power this task and model?

Developing a Promptable Segmentation Task

In response to these questions, we have developed a promptable segmentation task, which allows for powerful pre-training and a wide range of downstream applications. The SAM architecture supports flexible prompting, real-time segmentation mask output, and ambiguity awareness, meeting the requirements of real-world use. To train SAM, we have built a diverse, large-scale data engine that iterates between using our efficient model to assist in data collection and using the newly collected data to improve the model.

Our final dataset, SA-1B, contains over one billion masks from 11 million licensed and privacy-preserving images, making it 400 times larger than any existing segmentation dataset. We believe that SA-1B will become a valuable resource for research aiming to build new foundation models.

We also address responsible AI by studying and reporting on potential fairness concerns and biases when using SA-1B and SAM. Images in SA-1B span a geographically and economically diverse set of countries, and SAM’s performance is consistent across different groups of people. We hope that our work contributes to a more equitable real-world application of AI technologies.

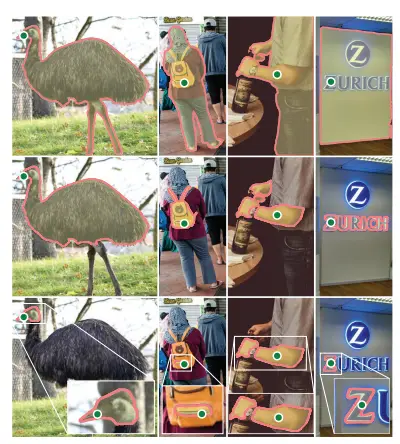

Extensive evaluation of SAM has shown consistently strong quantitative and qualitative results in various downstream tasks under a zero-shot transfer protocol using prompt engineering. These results suggest that SAM can be effectively used out-of-the-box with prompt engineering to solve a variety of tasks involving object and image distributions beyond SAM’s training data. However, there is still room for improvement, which we discuss in our analysis.

Meta is releasing the SA-1B dataset for research purposes and making SAM available under a permissive open license (Apache 2.0) at https://segment-anything.com. Additionally, we showcase SAM’s capabilities with an online demo, allowing users to experience the power of this revolutionary model in action.

Introduction to the Versatile Segmentation Task of Segment Anything Project

Taking inspiration from NLP, this article explores the creation of a foundation model for segmentation. The goal is to define a task with comparable capabilities to those found in NLP.

The Promptable Segmentation Task

The idea of a prompt is translated from NLP to segmentation. A prompt can be a set of foreground/background points, a rough box or mask, free-form text, or any information indicating what to segment in an image. The goal is to return a valid segmentation mask based on any given prompt. A “valid” mask means that, even when a prompt is ambiguous, the output should be a reasonable mask for at least one of the objects.

Pre-training Algorithm and Zero-shot Transfer

The promptable segmentation task leads to a natural pre-training algorithm that simulates a sequence of prompts for each training sample and compares the model’s mask predictions against the ground truth. This approach ensures that a pre-trained model is effective in use cases involving ambiguity. By developing the ability to respond appropriately to any prompt at inference time, the model can be adapted to various downstream tasks via prompt engineering.

Related Segmentation Tasks and Task Generalization

Segmentation is a broad field, encompassing interactive segmentation, edge detection, super-pixelization, object proposal generation, foreground segmentation, semantic segmentation, instance segmentation, panoptic segmentation, and more. The promptable segmentation task aims to produce a broadly capable model that can adapt to many existing and new segmentation tasks through prompt engineering, a form of task generalization.

The Segment Anything Model (SAM)

The Segment Anything Model (SAM) is designed for promptable segmentation. SAM has three components: an image encoder, a flexible prompt encoder, and a fast mask decoder. Built on Transformer vision models, SAM features specific tradeoffs for real-time performance.

Image Encoder

The image encoder is based on a pre-trained Vision Transformer (ViT) adapted to process high-resolution inputs. It runs once per image and can be applied before prompting the model.

Prompt Encoder

The prompt encoder considers sparse (points, boxes, text) and dense (masks) prompts. Points and boxes are represented by positional encodings, while free-form text is encoded with an off-the-shelf text encoder. Dense prompts (masks) are embedded using convolutions and summed element-wise with the image embedding.

Mask Decoder

The mask decoder maps the image embedding, prompt embeddings, and an output token to a mask. To resolve ambiguity, the model predicts multiple output masks for a single prompt. The model predicts a confidence score for each mask, ranking them according to the estimated IoU.

Efficiency and Training

The overall model design is motivated by efficiency. With a precomputed image embedding, the prompt encoder and mask decoder run in a web browser on a CPU in approximately 50ms. The model is trained for the promptable segmentation task using a mixture of geometric prompts.

Segment Anything Project RAI Analysis

Due to the scarcity of segmentation masks online, the Segment Anything Data Engine was developed to facilitate the collection of a 1.1-billion-mask dataset called SA-1B. The data engine comprises three stages: (1) model-assisted manual annotation, (2) semi-automatic annotation involving a mix of auto-predicted masks and model-assisted annotation, and (3) a fully automatic stage where the model generates masks independently.

In the first stage, professional annotators used a browser-based interactive segmentation tool, SAM, to label masks by selecting foreground and background points. Annotators were not restricted by semantic constraints and labeled a wide range of objects. As the model improved, the average annotation time per mask reduced from 34 to 14 seconds. This stage produced 4.3 million masks from 120,000 images.

During the semi-automatic stage, the objective was to increase mask diversity and improve the model’s ability to segment any object. Annotators focused on less prominent objects, and an additional 5.9 million masks were collected from 180,000 images. The average annotation time increased to 34 seconds due to the complexity of the objects.

In the final, fully automatic stage, the model had undergone two significant enhancements. First, enough masks had been collected to improve the model substantially. Second, the ambiguity-aware model allowed the prediction of valid masks even in ambiguous cases. The model was applied to all 11 million images in the dataset, resulting in a total of 1.1 billion high-quality masks.

The SA-1B dataset consists of 11 million diverse, high-resolution, licensed, and privacy-protected images and 1.1 billion high-quality segmentation masks. These images were sourced from a provider that works directly with photographers, ensuring high-resolution quality. Faces and vehicle license plates have been blurred for privacy protection.

The data engine produced 1.1 billion masks, 99.1% of which were generated automatically. A random sample of 500 images was taken to evaluate mask quality, with 94% of the automatically generated masks having a greater than 90% intersection over union (IoU) with professionally corrected masks.

The SA-1B dataset offers 11 times more images and 400 times more masks than the second-largest existing segmentation dataset, Open Images. The mask properties of SA-1B are broadly similar to other datasets in terms of concavity distribution, suggesting that it offers a robust solution for computer vision foundation models.

Discussion on the Segment Anything Project: Introducing Promptable Segmentation and the SAM Model

Foundation Models in Machine Learning

Pre-trained models have been utilized for downstream tasks in machine learning since the early days, and the importance of such models has increased in recent years due to the emphasis on scale. These models have been rebranded as “foundation models” because they are trained on broad data at scale and can be adapted to a wide range of downstream tasks. However, it is important to note that a foundation model for image segmentation represents only a fractional subset of computer vision.

SAM Model and Compositionality

The SAM model introduced by the Segment Anything Project aims to create a reliable interface between SAM and other components by predicting a valid mask for a wide range of segmentation prompts.

This feature enables SAM to power new capabilities beyond ones imagined at the moment of training, similar to how CLIP is used as a component in larger systems such as DALL·E. SAM’s ability to generalize to new domains like ego-centric images makes it suitable for new applications without the need for additional training.

Limitations of SAM

While SAM performs well in general, it is not perfect. It can miss fine structures and hallucinate small disconnected components at times. Also, SAM’s overall performance is not real-time when using a heavy image encoder. Furthermore, SAM is designed for generality and breadth of use rather than high IoU interactive segmentation. Although SAM can perform many tasks, it is unclear how to design simple prompts that implement semantic and panoptic segmentation.

Conclusion and Acknowledgments about Segment Anything Project

The Segment Anything Project introduces a new task (promptable segmentation), model (SAM), and dataset (SA-1B) that make image segmentation in the era of foundation models possible. T

he perspective of this work, the release of over 1B masks, and the promptable segmentation model will pave the path ahead for future research in this field. The authors acknowledge the valuable contributions of their colleagues in scaling the model, developing the data annotation platform, and optimizing the web version of the model.

Check the full research paper here. Also check out our article about Web Stable Diffusion.

Try Segment Anything Demo

Prior to starting, please note that this is a research demo and should not be used for any commercial purposes. Uploaded images will only be utilized to showcase the Segment Anything Model and will be deleted at the end of the session, including any data obtained from them. It is crucial to ensure that any images uploaded comply with both intellectual property rights and Facebook’s Community Standards.

The SAM model, developed by Meta AI, is a novel AI system capable of “cutting out” any object within an image using a single click. This promptable segmentation model offers zero-shot generalization to unfamiliar images and objects without the need for additional training, making it highly adaptable and versatile.

Frequently Asked Questions (FAQ) about Segment Anything (SAM)

1. What type of prompts are supported by the SAM model?

The SAM model supports a variety of input prompts such as specifying objects to segment in an image, using interactive points and boxes, and automatically segmenting everything in an image.

2. What is the structure of the SAM model?

SAM is designed to be efficient and flexible with a one-time image encoder and a lightweight mask decoder that can run in a web-browser in just a few milliseconds per prompt.

3. What platforms does the SAM model use?

SAM can take input prompts from other systems, and its mask outputs can be used as inputs to other AI systems.

4. How big is the SAM model?

The size of the SAM model is not specified in the provided information.

5. How long does inference take for the SAM model?

The inference time for the SAM model is not specified in the provided information.

6. What data was used to train the SAM model?

The SAM model was trained on millions of images and masks collected through the use of a model-in-the-loop “data engine.”

7. How long does it take to train the SAM model?

The training time for the SAM model is not specified in the provided information.

8. Does the SAM model produce mask labels?

Yes, the SAM model produces segmentation masks as output.

9. Does the SAM model work on videos?

It is not clear from the provided information if the SAM model can work on videos.

10. Where can I find the code for the SAM model?

The code for the SAM model can be found on the Meta AI Github page.

Acknowledgements:

The SAM model was developed by the Meta AI research team, including Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, and Ross Girshick. Many others contributed to the project, and their names can be found on the project’s page.